TL;DR: We propose a training-free method for image editing and generation with personalized concepts. At inference time, we combine LoRA adapters trained for different subjects, using non-overlapping subject priors.

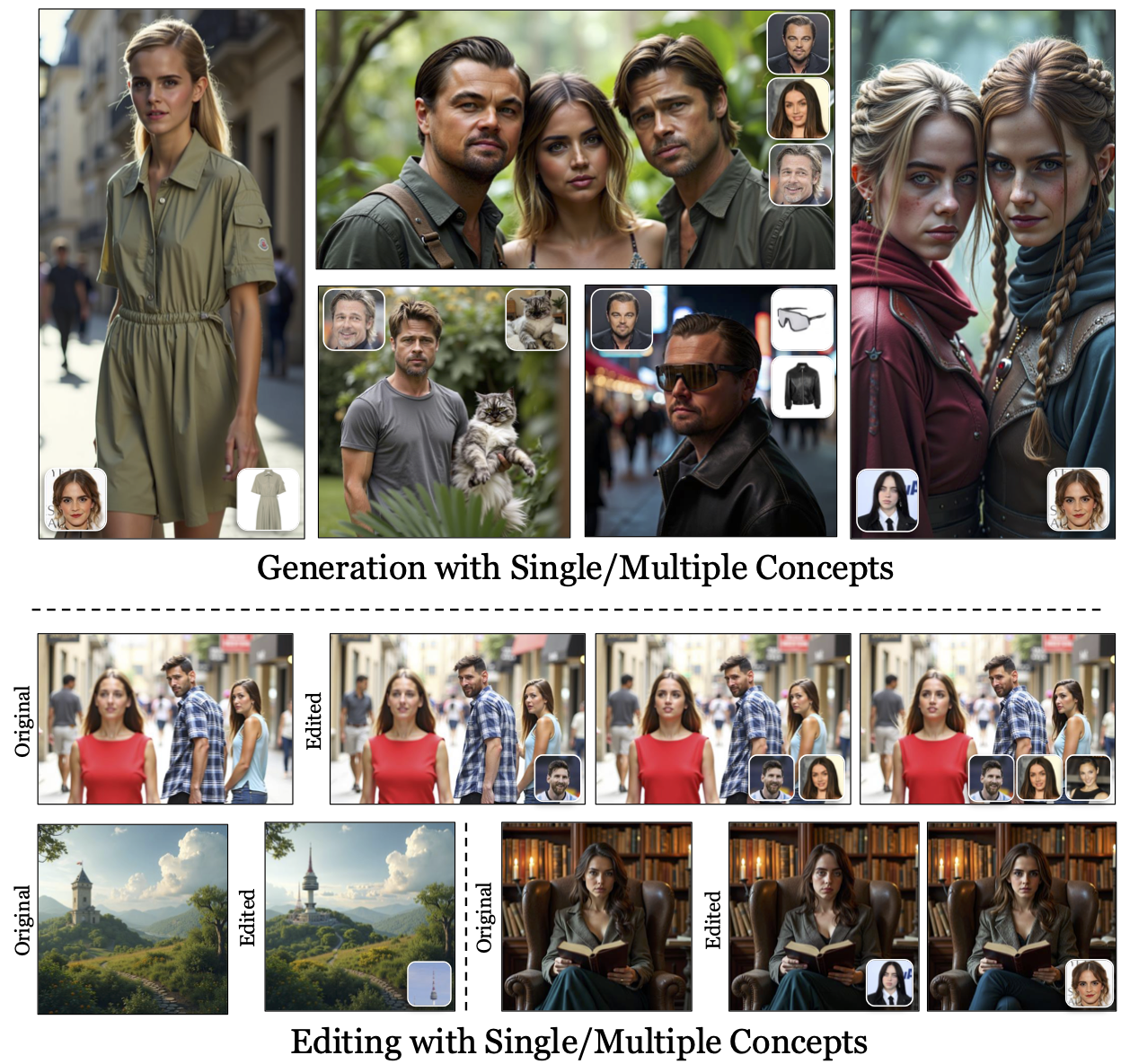

We present LoRAShop, a training-free framework enabling the simultaneous use of multiple LoRA adapters for generation and editing. By identifying the coarse boundaries of personalized concepts as subject priors, we allow the use of multiple LoRA adapters by eliminating the "cross-talk" between different adapters.

Abstract

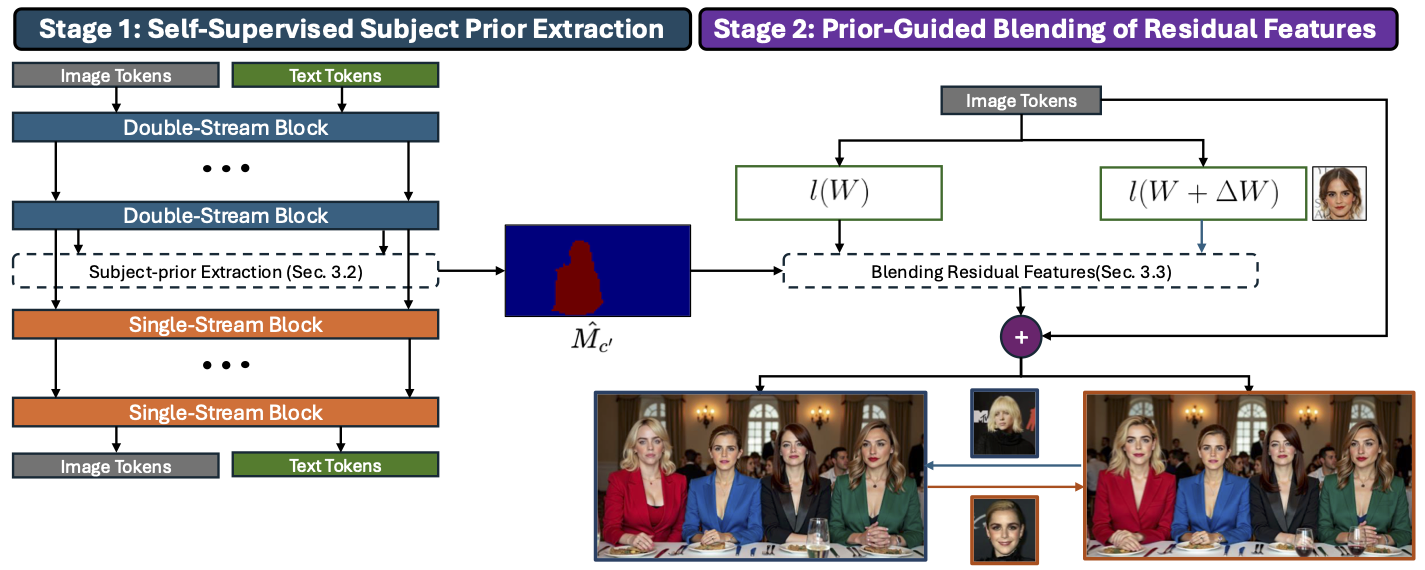

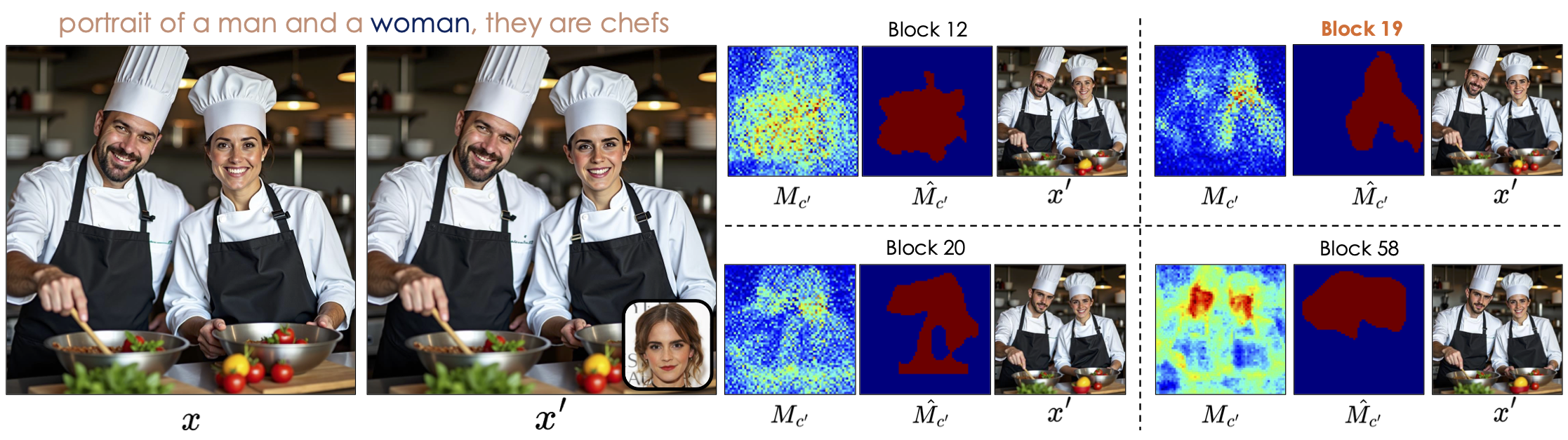

We introduce LoRAShop, the first framework for multi-concept image editing with LoRA models. LoRAShop builds on a key observation about the feature interaction patterns inside Flux-style diffusion transformers: concept-specific transformer features activate spatially coherent regions early in the denoising process. We harness this observation to derive a disentangled latent mask for each concept in a prior forward pass, and we blend the corresponding LoRA weights only within the regions bounding the concepts to be personalized. The resulting edits seamlessly integrate multiple subjects or styles into the original scene while preserving global context, lighting, and fine details. Our experiments demonstrate that LoRAShop delivers better identity preservation than the baselines. By eliminating retraining and external constraints, LoRAShop turns personalized diffusion models into a practical "photoshop-with-LoRAs" tool and opens new avenues for compositional visual storytelling and rapid creative iteration.

Method

LoRAShop enables multi-subject generation and editing through a two-stage, training-free pipeline. First, we extract the subject prior M̂c', which gives a coarse estimate of where the concept of interest, c', is located. Next, we introduce a blending mechanism over the transformer block residuals, which both enables seamless blending of customized features and bounds the region of interest for each LoRA adapter.
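The second stage can be illustrated with a minimal, dependency-free sketch. It assumes the blend is a per-token convex interpolation between the base residual and each adapter's residual, weighted by that adapter's subject prior. The interpolation rule, the function name `blend_residuals`, and the list-of-lists token layout are illustrative assumptions, not the authors' implementation.

```python
from typing import List, Sequence, Tuple

Tokens = List[List[float]]  # one feature vector per image token


def blend_residuals(
    base: Tokens,
    adapters: Sequence[Tuple[Tokens, List[float]]],
) -> Tokens:
    """Blend adapter residuals into the base residual, token by token.

    Each adapter is a (residual, mask) pair. A mask value of 1.0 means the
    token takes the adapter's residual, 0.0 means it keeps the current value.
    ASSUMPTION: convex interpolation; the page does not spell out the rule.
    """
    out = [list(tok) for tok in base]  # copy so the input is not mutated
    for residual, mask in adapters:
        if len(residual) != len(base) or len(mask) != len(base):
            raise ValueError("residual and mask must have one entry per token")
        for i, m in enumerate(mask):
            out[i] = [(1.0 - m) * o + m * r for o, r in zip(out[i], residual[i])]
    return out


# Hypothetical toy data: three tokens, two adapters with non-overlapping masks.
base = [[0.0, 0.0], [0.0, 0.0], [0.0, 0.0]]
adapter_a = ([[1.0, 1.0]] * 3, [1.0, 0.0, 0.0])  # owns token 0
adapter_b = ([[2.0, 2.0]] * 3, [0.0, 1.0, 0.0])  # owns token 1
blended = blend_residuals(base, [adapter_a, adapter_b])
```

Because the masks do not overlap, each token is affected by at most one adapter, so the order in which adapters are applied does not change the result. Tokens outside every mask keep the base residual.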

Extraction of Subject Priors

Upon investigating the transformer blocks, we observe that the last double-stream block of FLUX provides text-image attention maps that can effectively separate different entities.
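One way such attention maps could be turned into non-overlapping subject priors is sketched below. It assumes each concept already has one attention score per image token, and it applies min-max normalization, a fixed threshold, and a per-token argmax to resolve overlaps. These three steps, the `threshold` value, and the function name `subject_priors` are assumptions made for illustration; the page does not specify how the masks are binarized or disentangled.

```python
from typing import Dict, List


def subject_priors(
    attn: Dict[str, List[float]], threshold: float = 0.5
) -> Dict[str, List[int]]:
    """Turn per-concept attention scores into non-overlapping binary masks.

    attn maps a concept name to one attention score per image token.
    ASSUMPTION: normalize, threshold, then assign each token to at most one
    concept (the one with the highest normalized score).
    """
    if not attn:
        return {}
    concepts = list(attn)
    n = len(attn[concepts[0]])
    if any(len(scores) != n for scores in attn.values()):
        raise ValueError("all attention maps must cover the same tokens")

    # Min-max normalize each map so concepts are comparable.
    norm: Dict[str, List[float]] = {}
    for name, scores in attn.items():
        lo, hi = min(scores), max(scores)
        span = hi - lo
        norm[name] = [(s - lo) / span if span > 0 else 0.0 for s in scores]

    # Each token goes to the strongest concept, if it clears the threshold.
    masks = {name: [0] * n for name in concepts}
    for i in range(n):
        best = max(concepts, key=lambda name: norm[name][i])
        if norm[best][i] >= threshold:
            masks[best][i] = 1
    return masks


# Hypothetical scores for two concepts over four image tokens.
priors = subject_priors({
    "person_a": [0.9, 0.8, 0.1, 0.0],
    "person_b": [0.0, 0.7, 0.9, 0.1],
})
```

In this toy example the second token scores highly for both concepts, and the argmax step assigns it to only one of them, which is what keeps the resulting masks disjoint.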

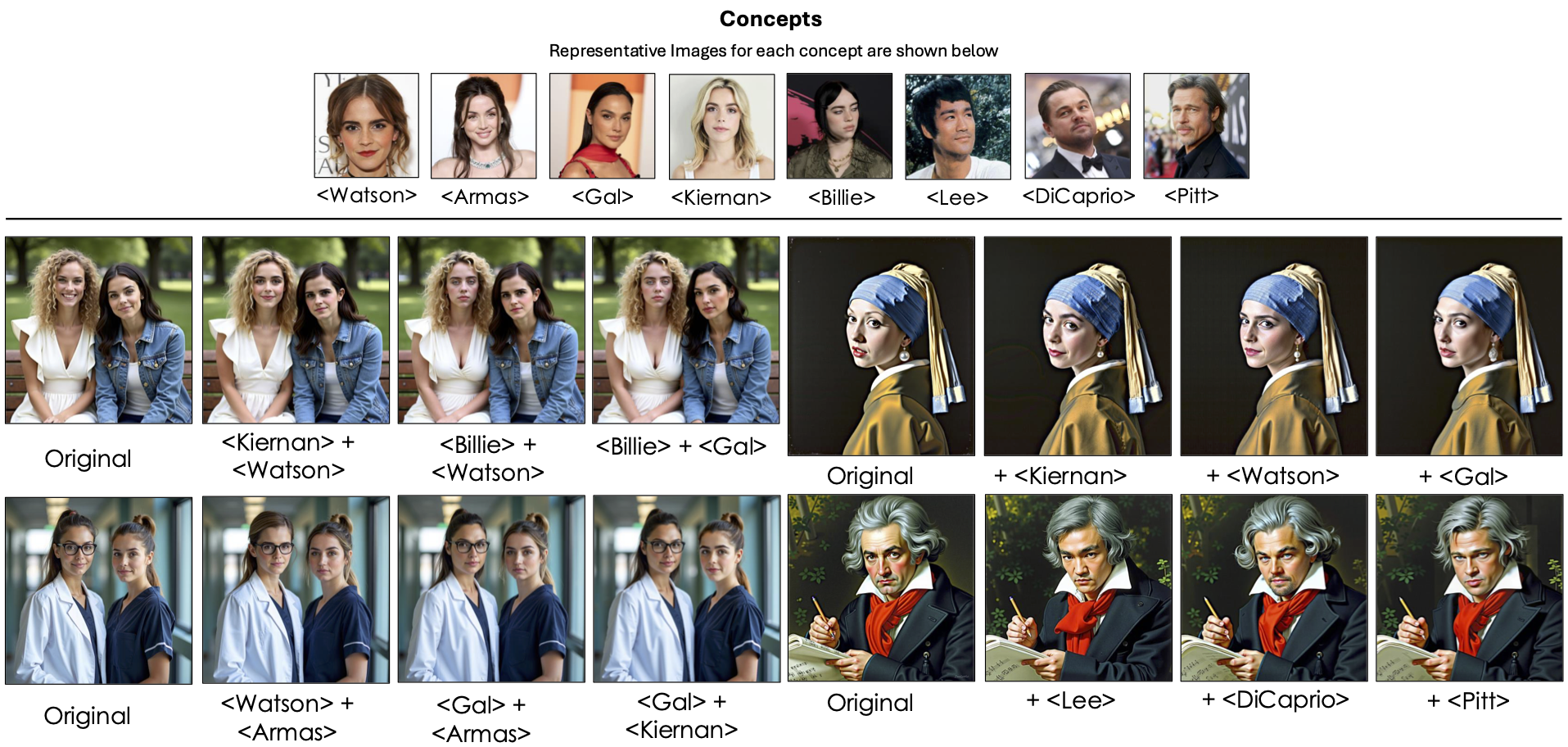

Editing Results

Personalized Editing with Single/Multiple Concepts

On both real and generated images, our method can target individual concepts and apply the desired personalized edits to them.

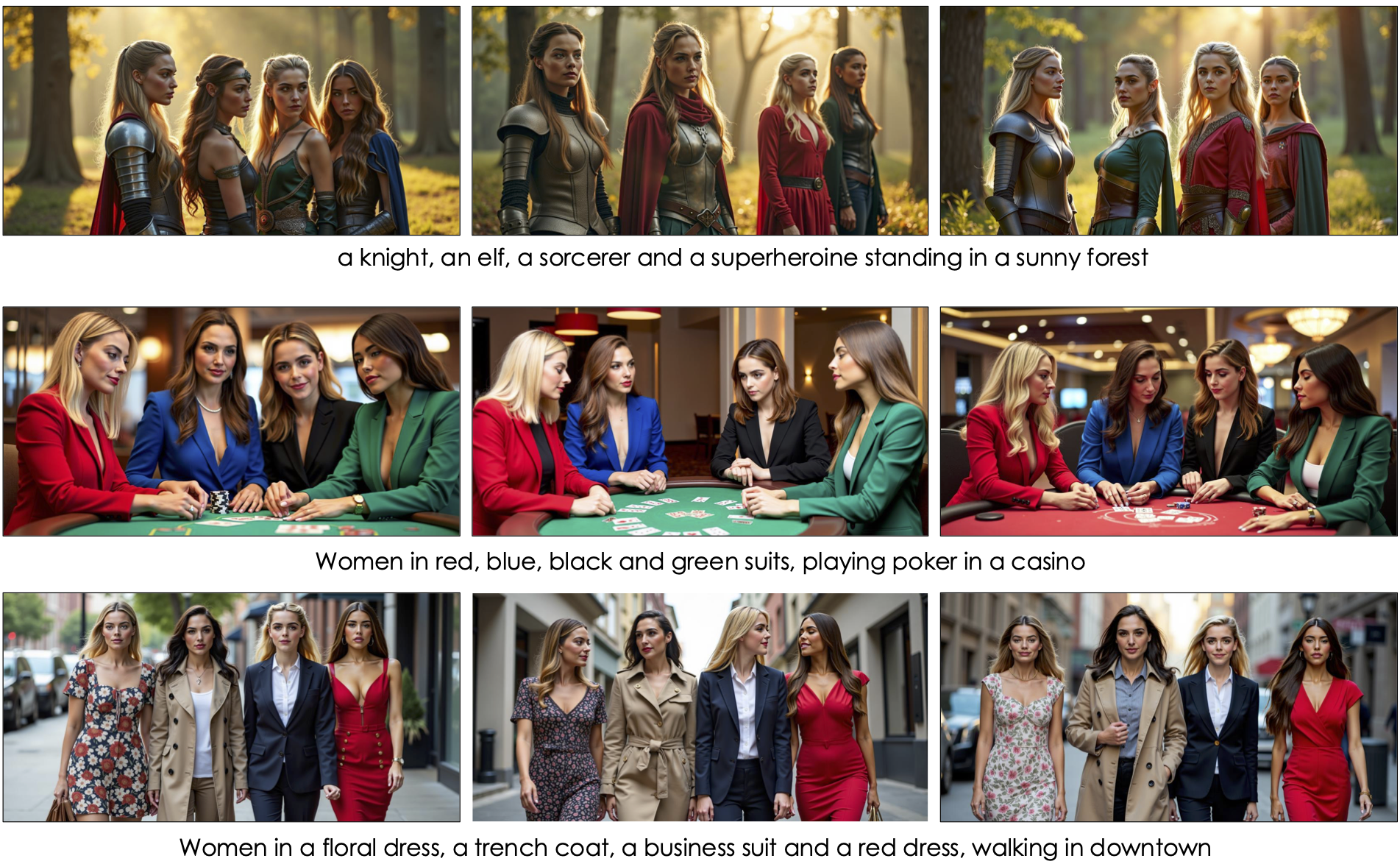

Generation with Multiple Concepts

Generation with Four Concepts

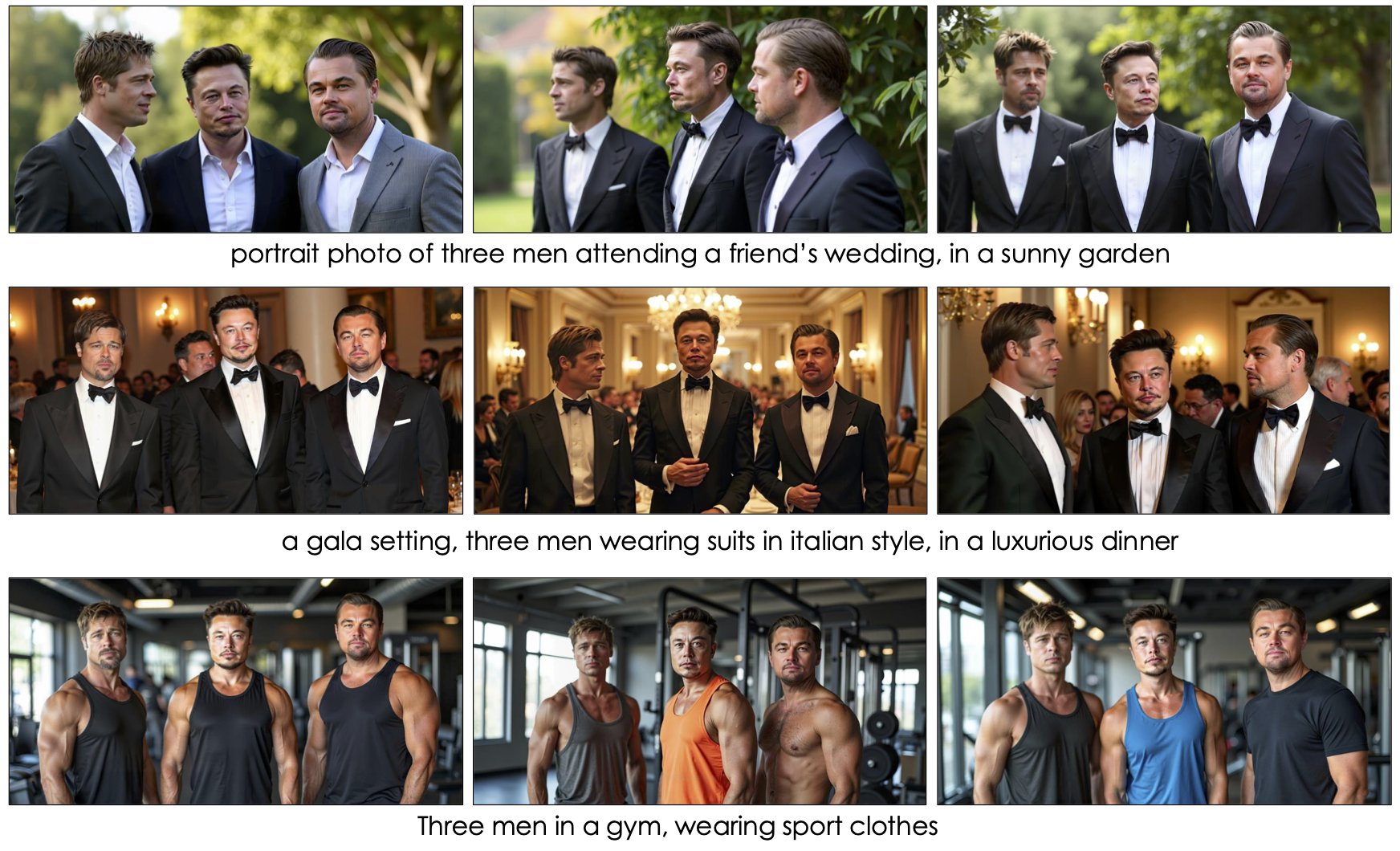

Generation with Three Concepts

Generation with Concepts from Different Domains

In addition to editing, our method can generate images with multiple personalized concepts, which can be of the same kind (e.g., person) or of different kinds (e.g., person and clothing).

BibTeX

@misc{dalva2025lorashop,
  title={LoRAShop: Training-Free Multi-Concept Image Generation and Editing with Rectified Flow Transformers},
  author={Yusuf Dalva and Hidir Yesiltepe and Pinar Yanardag},
  year={2025},
  eprint={2505.23758},
  archivePrefix={arXiv},
  primaryClass={cs.CV},
  url={https://arxiv.org/abs/2505.23758},
}